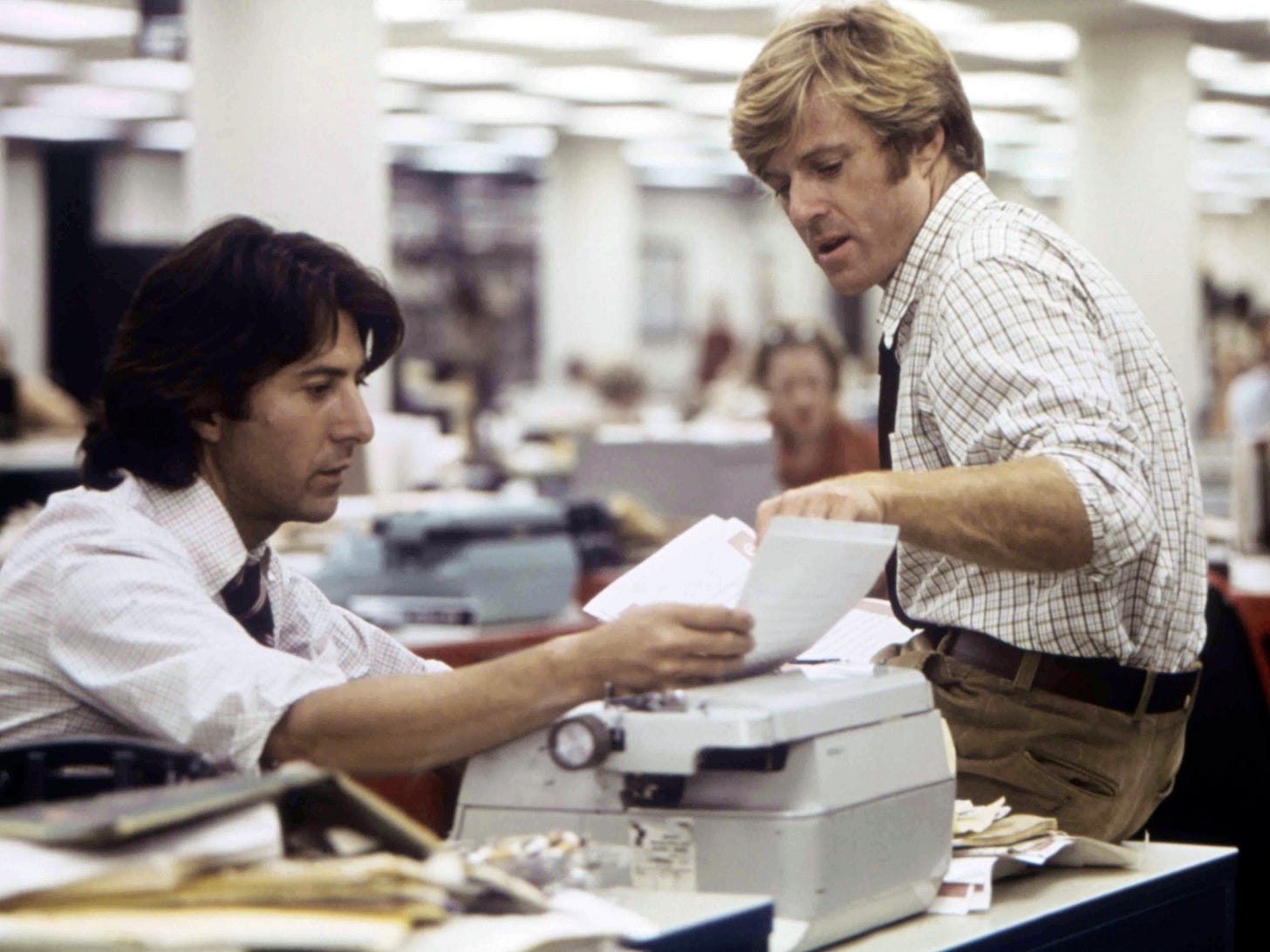

An AI Takeover of News? Not yet.

Humans will always be integral to the news business

As someone who spends most of his time working in the news business, I have been closely following the conversation around generative AI and the future of news, trying to figure out what I think. The era of AI is challenging my inclination to not offer opinions until all the facts are in.

However, given that the facts will not be “in” for years, and given that the conversation on AI and news is beginning to take shape (my favorite analysis so far is from the Reuters Institute), I thought I would dip my toes in.

My take: although AI is going to change the newsroom, human journalists aren’t going anywhere anytime soon. The reason: humans are irreplaceable in several of the key processes necessary to produce quality, fact-based journalism.

Take reporting, for example. An AI large language model (LLM) may be able to scan the internet for information and synthesize findings. However, AI cannot generate net new information — the kind of information gathered through old-fashioned shoe-leather reporting in communities. A world without reporters is a world with machines chewing up and spitting out versions of the same information over and over again.

Another example: fact-checking. Trust is the primary reason that readers choose a given publisher; it is, in effect, the driver of the news business model. LLMs cannot distinguish fact from fiction; they can only predict the next word based on a sequence of previous words. Perhaps most important and most obvious: LLMs “hallucinate” and regularly spit out incorrect information. Traditional AI can be trained to identify misinformation, and the social media platforms rely on those models now. But the judgment at the center of what is true and what is not, the key driver of trust, must be human.

Moreover, there will always be a role for human writers. No matter how well prompts are eventually “engineered” (indeed, the New York Times is beginning to hire “prompt engineers” for generative AI), LLMs do not produce writing of sufficient quality for the vast majority of stories. True, not every story needs to be as well-written as a story one would read in The Atlantic, but, by most accounts, LLMs today do not write well enough to cover everyday topics, such as how my beloved Baltimore Orioles played last night. Perhaps one day they might; these models keep improving. But so much of what makes good writing is the abstract “human creative touch” that AI will not be able to replicate.

My belief that human beings will continue to be at the center of journalism does not take away from the incredible promise offered by generative AI. Everyday newsroom tasks, including content management, will be greatly simplified. Generative AI can help a busy beat reporter with a quick first draft, freeing them up to produce more. It will eventually be able to alter the drafts of stories ever so slightly to target users based on their previous interests, making stories more worthy of clicks (a great business proposition, if a bit fraught ethically).

Additionally, generative AI will create better experiences for readers, who will be able to manipulate the news they read into whatever format they prefer (text, video, audio). They can use generative AI to summarize stories and go deeper into topics of their choosing. They can ask for the news to be read to them in the voice of Tina Turner if they want (reports are that it’s ‘simply the best’). As quickly as consumers’ preferences change — and as quickly as generative AI evolves — there are sure to be new AI-driven products and services that appeal to wide swaths of readers.

However, as exciting as those possibilities are, quality journalism requires human beings. Alarmists arguing that AI will completely steamroll journalism are speaking too soon. For the foreseeable future, journalists themselves will still own “the first draft of history” — AI can only create subsequent ones.