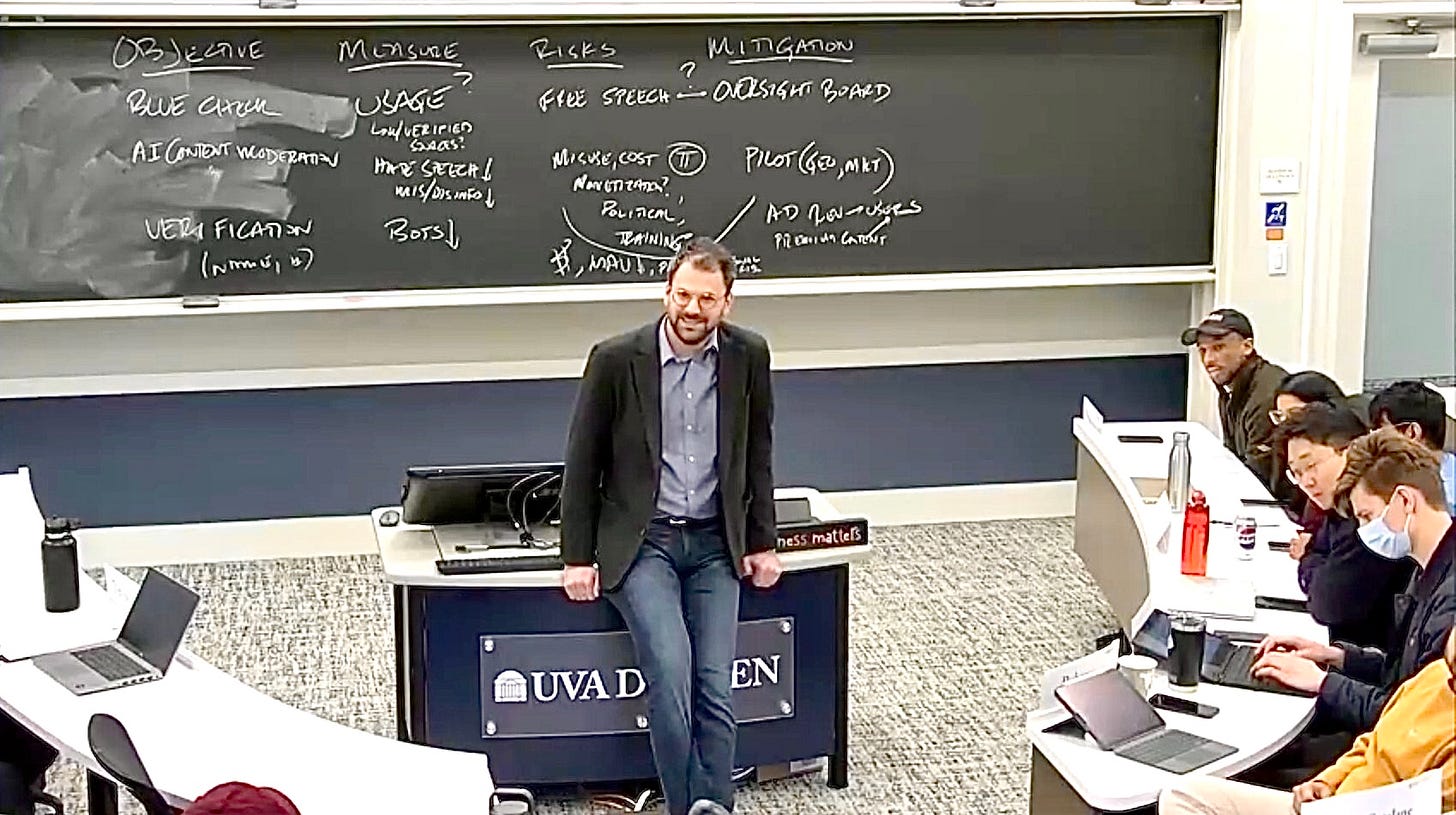

A belated “happy new year” to Ethical Technologist readers and “welcome” to our new subscribers. Apologies for my hiatus: I spent the first months of the year focusing my energies on the Darden classroom, where I was teaching my elective, “Technology and Ethics,” with Professor Bidhan Parmar. Now that the course has concluded, I wanted to share some of my reflections on the term with you all.

The key takeaway: amid all the doom and gloom about how the inevitable advance of AI is going to end human life as we know it, there is reason to hope that we will harness the promise of new technologies. That reason: the next generation of corporate managers wants to apply ethical reasoning to the challenges and opportunities these technologies present, and its members believe their decisions will play a role in preventing a technological dystopia and realizing the promise of AI.

Coming into this year’s class, I assumed, based on our experience in the previous school year, that one of our core responsibilities was to convince students to care about ethics in the first place. One of the main objections the Ethics faculty at Darden hears regularly is a Milton Friedman-style critique: ethical considerations are a cost that risks compromising share price, the only metric businesses should care about. Applied to technology, that argument sounds like “move fast and break things,” or, more concretely, acquire additional users no matter the social costs. Proponents of this line of reasoning sometimes argue that the time spent understanding the ethical context of a decision is simply wasted. Several of our students last year voiced versions of this reasoning.

This year, however, the conversation had completely changed, and we did not hear this line of reasoning at all. My hypothesis for why: all of our students understood that we stand at a fulcrum of human history, where we are deciding whether generative AI will support or harm human flourishing. The old logic of dealing with externalities after we launch products around the world was out of the question. Each of my 64 students believed that technologists should bring ethical reasoning into every stage of product development so that, when products launch, their builders can feel confident those products will help humanity flourish.

This year’s students, rather than questioning whether ethics was worth considering, were asking themselves, “What should I do?” Drawing on our class discussions and an amazing list of guest speakers (some of whom will come on the podcast!), students started grappling with what they would do when presented with what seem like awful choices. Some students advocated for more of a hands-off approach; others were more risk-averse. However, rather than gravitating towards political corners or doubling down on previously held ideological beliefs, students were open to changing their minds and revising their initial opinions in an area where there is no such thing as “the right answer.” Contrary to the narrative that today’s students are not open to debate, my students did an exceptional job of listening to views that made them uncomfortable and wrestling with hard topics.

Beyond “What should I do?”, students were also asking “What can I do?” They believe that their perspectives as ethical leaders will be valuable in the organizations they join after graduation. We had great class discussions about how leaders can accrue influence in companies so that stakeholders listen when they present ethical arguments. We also discussed how leaders should bring the right amount of diversity into the room to consider every angle of a debate, and how they should ask specific questions of data scientists, engineers, and legal teams to make sure they understand how the technical elements of a decision affect the human beings who use their products.

Another question that came up in class: what role should government play here? Through conversations with leaders across large social media platforms and one of the top AI regulators in the White House, students realized that the US government, no matter how well-intentioned, will lag behind corporations as they build products with an exponentially evolving technology at a global scale. Students concluded that although they could actively seek government input as a stakeholder and influence elected officials as citizens, the responsibility, at least in the short term, will fall on them as leaders in the tech industry to build the future they want to see.

In our final class, we asked the students to imagine what their ideal lives would look like in twenty years and what would have to be true about technology to bring their ideal lives into being. Then, we asked them to think about what technology will look like if it continues on its current path. Students saw obvious disconnects between the world they wanted and a world where ethical leaders do not step up to the plate.

The students’ response to the gap between those potential realities, their call to action, gives me more hope than ever about our technological future. Rather than simply accepting an onward march of technology shaped by the incentives of the venture capital industry and the opinions of a few CEOs, my students listed the ways, however incremental, that they could bend the world towards their ideals. Across ideological, racial, and socioeconomic lines, they simulated, in class, precisely the conversations business leaders ought to be having with each other about our collective future. Through their discussions, they came to see that an AI-driven future ought to support a new golden age of humanity, and that it was in their power to bring that future into being.

As Bill Gates famously said, “We always overestimate the change that will occur in the next two years and underestimate the change that will occur in the next ten.” The world in ten years will undoubtedly benefit from the ethical decisions these Darden students make at their companies after graduation.